Facebook fail: ineffective monitoring & heavy-handed censorship

I had the strangest encounter with the gods of Facebook the day after Christmas 2021; first, they told me that they removed something I posted way back in late November because “Your post goes against our Community Standards on dangerous individuals and organizations,” informing me that “No one else can see your post” and that “We have these standards to prevent and disrupt offline harm”:

“We don’t allow symbols, praise or support of dangerous individuals or organizations on Facebook.

We define dangerous as things like:

• Terrorist activity

• Organized hate or violence

• Mass or serial murder

• Human trafficking

• Criminal or harmful activity”

The message informed me that I’d be blocked for 24 hours; in fact, I was blocked for at least a few minutes, but I disagreed with the decision and I got a response noting, “You disagreed with the decision. Thanks for your feedback. We use it to make improvements on future decisions.” A few minutes later, someone reviewed the post, restored it & unblocked me, informing me, “Your post is back on Facebook. We’re sorry we got this wrong. We reviewed your post again and it does follow our Community Standards. We appreciate you taking the time to request a review. Your feedback helps us do better.” So by now, you’re probably wondering what the post was all about; it was one of my #OnThisDay posts in which I wrote,

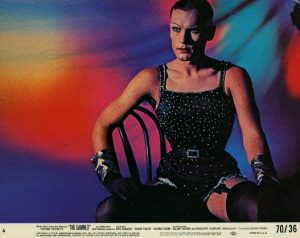

Ernst Röhm was born #OnThisDay in 1887, co-founding the Sturmabteilung (SA) that helped the Nazis to power; but Adolf Hitler ordered his assassination in 1934 in the Night of the Long Knives (Nacht der langen Messer), as dramatized in Luchino Visconti’s 1969 film, “La Caduta degli Dei” (The Damned).

I posted two images; one was a photo of a biography of Ernst Röhm. The other was an image from the Visconti film. At the end of the post, I included a Wikipedia entry about Ernst Röhm as well as a link to a scene from “The Damned.” Clearly, there was nothing in the post ‘praising’ or ‘supporting’ either of these characters or the Nazi party that established the Third Reich. The Wikipedia entry is just a straightforward account of Röhm’s life and death, while the Visconti film is hardly a celebration of Nazism; quite the contrary, it’s a searing indictment of Adolf Hitler’s violence and treachery and the stupidity and corruption of the German industrial elite, who foolishly thought they could control Hitler and use the Nazis to ward off perceived leftist threats to Weimar-era capitalism.

Anyone who read the post would clearly see it wasn’t anything of the sort but rather a little history lesson about that period in history and I’m guessing that my post just got caught up in an automated filter that Mark Zuckerberg set up to weed out far right-wing incitement to violence such as we all witnessed at the US Capitol on Jan. 6.

Perhaps the most ridiculous aspect of this whole affair is the fact that this action was taken a full four weeks after I posted it, by which time anyone who would have seen the original post would have already seen it, making Facebook’s removal of the post effectively irrelevant. From my own personal experience, with rare exceptions, most people see my Facebook posts on the day I post them or the next day; in a very few cases, people might see them three or four days later; but I’m not aware of anyone seeing a post a full month after I’ve posted it. If a monitoring system doesn’t act within a day or two, that monitoring system is all but useless, especially if the intention is to stop the propagation of hate speech and incitement to violence.

Even more ridiculous was a message I got from Facebook four months later (4.27.22):

“We Had To Remove Something You Posted: There are certain kinds of posts about suicide or self-injury that we don’t allow on Facebook, because we want to promote a safe environment. You can learn more about this in our Community Standards. If you’re going through a difficult time, we want to share ways you can find support.”

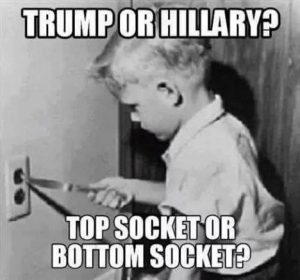

I had posted this meme a full six years before (3.14.16):

Anyone looking at this meme could tell that it has nothing to do with suicide but the humor was clearly lost on the underpaid Facebook worker (who undoubtedly is getting no benefits working from home on minimum wage) who made this decision, if it wasn’t actually simply ‘decided’ by an automated algorithm; but what’s most absurd is that Facebook removed it a full six years after I posted it; what honestly could be the point of that? It’s simply inconceivable that anyone would see it six years or probably even six days after I posted it, so aside from the fact that it couldn’t have possibly violated Facebook’s incoherent and mysterious ‘community standards,’ the notion that deleting this meme could have saved someone from contemplating suicide is laughable.

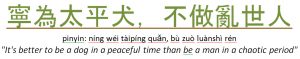

I had yet another bizarre encounter with the censors in July 2024 (7.17.24) after I posted this on my page along with this image:

“‘May you live in interesting times’ has been cited countless times as a traditional Chinese curse but there’s no documentation of its origin in China; instead it’s an example of Orientalism via Joseph Chamberlain; beware of fake Oriental wisdom~!”

Facebook responded, “This post may go against our Community Standards: Your post is now on Facebook, but it looks similar to other posts that were removed because they don’t follow our standards on hate speech,” but it’s hard to imagine any reasonable person reading my post and concluding that it constituted ‘hate speech’; quite the contrary, it’s clearly a challenge to the subtle prejudice of Orientalism.

I posted this comment on a friend’s Facebook page in response to her criticism of Donald Trump’s plan to use tariffs: “Most Americans are as ignorant of economics as DJT; tariffs rarely work & (as you say) will be paid for by consumers; if he’s serious about using tariffs (again), it will restart an inflationary spiral,” which prompted the censors of Facebook to respond,

“This comment may go against our Community Standards

Your comment is now on Facebook, but it looks similar to other comments that were removed because they don’t follow our standards on hate speech” (11.7.24)

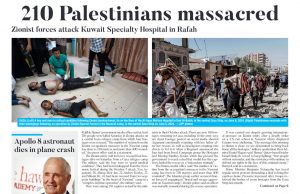

Facebook covered a photo (12.6.24) I’d posted from the Kuwait Times (6.9.24), telling me (12.6.24), “We covered your post because it may show graphic content” — but what’s the point of covering a photo I posted six months before…? This is the stupidity of Meta censorship~! Here’s what I wrote: “GenocideJoeBiden, Democrats, Republican & the US news media are all hailing the murder of 236+ innocent Palestinian civilians as ‘heroic’ as ApartheidIsrael’s #GazaGenocide garners universal praise throughout the US establishment: #Zionism is genocidal murder…”

All this is just confirmation of what I already knew and that’s been widely reported, namely, that Facebook doesn’t have the staff to monitor on-line extremist activity and the staff it has are undoubtedly overworked and underpaid; they’re basically freelancers with no benefits sitting at home, monitoring literally billions of posts for violent content; what’s frustrating is that it’s almost impossible to directly contact Facebook staff when there’s an issue or a problem like this; instead, there’s an ineffective attempt to prevent the posting of open incitement to violence on this platform. The only good news is that in this case, at least, someone responded within a few minutes to my appeal disagreeing with the initial decision and could clearly see that the content of the post wasn’t even remotely a violation of Facebook’s community standards. If anything, Facebook’s algorithm should encourage the posting of little ‘history lessons’ such as I so frequently post; if the system put in place is unable to distinguish between a history lesson about the rise of Nazism and right-wing extremist incitement to violence, then that system is clearly ineffective and the management of the Facebook staff is incompetent — a compelling argument for government regulation of this social media behemoth…